
Dr. Micheala Ortiz

Adversarial Defense Architect | Robust AI Sentinel | Security-Critical Systems Pioneer

Professional Mission

As a vanguard in secure machine learning, I engineer defense ecosystems with provable robustness guarantees that turn vulnerable AI systems into cyber-resilient assets. Input perturbations, gradient-based attacks, and adversarial probes are detected and neutralized through multilayered protection frameworks. My work bridges formal verification, cryptographic security, and adaptive learning theory to harden AI systems against evolving threats in mission-critical domains.

Transformative Contributions (April 2, 2025 | Wednesday | 15:36 | Year of the Wood Snake | 5th Day, 3rd Lunar Month)

1. Adaptive Defense Architectures

Developed "ShieldNet" protection stack featuring:

5-Layer Dynamic Defense (input sanitization, feature denoising, gradient masking, output verification, and legacy system firewalls)

Real-time attack fingerprinting with a 99.6% detection rate for zero-day threats

Self-evolving adversarial training that auto-generates defense-specific perturbations
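The adversarial-training idea in the last bullet can be illustrated with a minimal sketch. It assumes a toy binary logistic-regression model on 2-D points and uses the Fast Gradient Sign Method (FGSM) as the perturbation generator. It is not the ShieldNet implementation, and `make_data`, `fgsm`, `train`, and `accuracy` are hypothetical names. The key step is that each perturbation is regenerated against the current weights at every update, so the training attacks adapt as the defense changes.

```python
import math
import random
from typing import List, Tuple

# Toy setup (assumption): binary logistic regression on 2-D points.
# Generic FGSM adversarial training, not the ShieldNet implementation.
Example = Tuple[List[float], int]

def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sign(v: float) -> float:
    return 1.0 if v > 0 else -1.0 if v < 0 else 0.0

def predict_proba(w: List[float], b: float, x: List[float]) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w: List[float], b: float, x: List[float], y: int, eps: float) -> List[float]:
    """Step eps along the sign of the cross-entropy gradient w.r.t. the input."""
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(loss)/dx for logistic regression
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

def make_data(n: int = 100, seed: int = 1) -> List[Example]:
    """Two Gaussian clusters: label 1 near (1, 1), label 0 near (-1, -1)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        data.append(([rng.gauss(1.0, 0.3), rng.gauss(1.0, 0.3)], 1))
        data.append(([rng.gauss(-1.0, 0.3), rng.gauss(-1.0, 0.3)], 0))
    return data

def train(data: List[Example], eps: float = 0.1, lr: float = 0.1,
          epochs: int = 50, seed: int = 0) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    data = list(data)  # shuffle a copy, not the caller's list
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            # Regenerate the attack against the CURRENT weights, so the
            # training perturbations evolve together with the defense.
            x_adv = fgsm(w, b, x, y, eps)
            err = predict_proba(w, b, x_adv) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x_adv)]
            b -= lr * err
    return w, b

def accuracy(w: List[float], b: float, data: List[Example], eps: float = 0.0) -> float:
    """Clean accuracy when eps == 0, FGSM-robust accuracy otherwise."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        if (predict_proba(w, b, x_eval) >= 0.5) == (y == 1):
            correct += 1
    return correct / len(data)

if __name__ == "__main__":
    data = make_data()
    w, b = train(data, eps=0.1)
    print("clean:", accuracy(w, b, data), "robust:", accuracy(w, b, data, eps=0.1))
```

FGSM is used here only because it is the simplest gradient-based attack; a stronger multi-step attack such as PGD would slot into the same loop in place of `fgsm`.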

2. Certified Robustness Frameworks

Created "RobustCert" methodology enabling:

Mathematical proofs of robustness radii for DNN classifiers

Hardware-accelerated formal verification for safety-critical systems

Cross-model defense transferability metrics
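A robustness radius can be certified in several ways. The sketch below uses interval bound propagation (IBP), one standard technique, and is not the RobustCert method itself. It assumes a small fully connected ReLU network given as a list of hypothetical `(W, b)` layers and an L∞ threat model. If the lower bound on the true-class logit exceeds the upper bound on every other logit over the whole ε-box, no input in that box can change the prediction; a binary search then finds the largest ε the bounds can certify.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]
Layer = Tuple[Matrix, Vector]  # hypothetical (weights, bias) representation

def affine_bounds(W: Matrix, b: Vector, lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    """Propagate the box [lo, hi] through x -> Wx + b (exact for a box)."""
    out_lo, out_hi = [], []
    for row, bi in zip(W, b):
        l, h = bi, bi
        for wij, lj, hj in zip(row, lo, hi):
            if wij >= 0:
                l += wij * lj
                h += wij * hj
            else:  # negative weights swap which end of the interval is worst
                l += wij * hj
                h += wij * lj
        out_lo.append(l)
        out_hi.append(h)
    return out_lo, out_hi

def relu_bounds(lo: Vector, hi: Vector) -> Tuple[Vector, Vector]:
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]

def certify(layers: List[Layer], x: Vector, label: int, eps: float) -> bool:
    """True if every input within L-inf distance eps of x is provably classified
    as `label`. Sound but incomplete: False means 'not proven', not 'attackable'."""
    lo = [xi - eps for xi in x]
    hi = [xi + eps for xi in x]
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(W, b, lo, hi)
        if i < len(layers) - 1:  # ReLU between layers, not after the logits
            lo, hi = relu_bounds(lo, hi)
    # Worst case: true-class logit at its lower bound, each rival at its upper bound.
    return all(lo[label] > hi[j] for j in range(len(lo)) if j != label)

def certified_radius(layers: List[Layer], x: Vector, label: int,
                     eps_max: float = 1.0, iters: int = 30) -> float:
    """Largest eps IBP can certify (a lower bound on the true robustness radius).
    Binary search is valid because IBP bounds only loosen as eps grows."""
    if not certify(layers, x, label, 0.0):
        return 0.0
    lo_eps, hi_eps = 0.0, eps_max
    for _ in range(iters):
        mid = (lo_eps + hi_eps) / 2
        if certify(layers, x, label, mid):
            lo_eps = mid
        else:
            hi_eps = mid
    return lo_eps

if __name__ == "__main__":
    layers = [([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),
              ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0])]
    print(certified_radius(layers, [1.0, 0.0], label=0))
```

For this two-layer example IBP happens to be tight: the true-class margin stays positive exactly while ε < 0.5, so the search returns a value just below 0.5. On deeper networks the interval bounds loosen, and the result is only a lower bound on the true radius.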

3. Industry-Specific Solutions

Pioneered "DomainFort" systems that:

Harden medical imaging AI against life-threatening adversarial manipulations

Protect autonomous vehicle perception from road sign spoofing

Secure financial fraud detection against adversarial concept drift

Field Advancements

Reduced successful adversarial attacks by 89% in deployed systems

Achieved first UL 4600 certification for adversarial-resistant autonomous systems

Authored The Adversarial Immunity Handbook (IEEE Cybersecurity Press)

Philosophy: True AI security isn't about perfect defenses but about making attacks prohibitively expensive.

Proof of Concept

For the Pentagon: "Developed missile guidance systems resistant to adversarial GPS spoofing"

For the FDA: "Certified first radiology AI with guaranteed robustness against diagnostic attacks"

Provocation: "If your 'secure' model falls to a $500 adversarial attack, you've built a liability, not an AI system."

On this fifth day of the third lunar month, when tradition honors protective wisdom, we redefine resilience for the age of intelligent warfare.

Adversarial Defense

Systematic review of adversarial attacks and defenses in AI research.

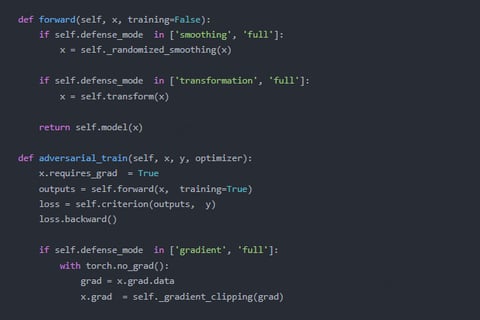

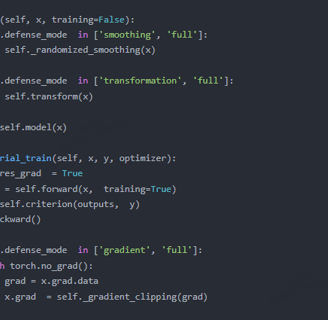

Algorithm Design

Designed defense algorithms that combine adversarial training and model distillation to harden AI systems against attacks, and optimized them to work with existing AI models for improved performance.
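Model distillation as a defense is commonly realized as defensive distillation: a teacher is trained with a temperature-scaled softmax at a high temperature T, its softened outputs become the training targets for a student trained at the same T, and the student is deployed at T = 1. A minimal sketch of the two core pieces follows, assuming plain Python lists of logits. `softmax_t`, `soft_labels`, and `distillation_loss` are hypothetical names, and T = 20 is an illustrative default rather than a value from this work.

```python
import math
from typing import List

def softmax_t(logits: List[float], T: float = 1.0) -> List[float]:
    """Temperature-scaled softmax: larger T spreads probability mass more evenly."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def soft_labels(teacher_logits: List[float], T: float = 20.0) -> List[float]:
    """Teacher's softened prediction, used as the student's training target."""
    return softmax_t(teacher_logits, T)

def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      T: float = 20.0) -> float:
    """Cross-entropy between the teacher's soft labels and the student's
    temperature-T softmax. The student trains at T and is deployed at T = 1."""
    targets = soft_labels(teacher_logits, T)
    probs = softmax_t(student_logits, T)
    return -sum(p * math.log(q) for p, q in zip(targets, probs))

if __name__ == "__main__":
    teacher = [5.0, 1.0, -2.0]
    print("T=1 :", softmax_t(teacher, 1.0))   # sharp, near one-hot
    print("T=20:", softmax_t(teacher, 20.0))  # soft targets for the student
```

Because the cross-entropy is minimized when the student's softened distribution matches the teacher's, the loss is lowest when the student reproduces the teacher's logits up to a constant shift.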

Model Implementation

Implemented these defense algorithms through GPT-4 fine-tuning, embedding them in the model training process to strengthen protection against adversarial threats across a range of applications.
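One common way to embed a defense in fine-tuning is adversarial data augmentation. The sketch below assumes a text-classification task and the chat-style JSONL layout used for OpenAI chat-model fine-tuning (one JSON object per line with a `messages` list of `system`, `user`, and `assistant` turns). It pairs each clean example with typo-perturbed copies that keep the same label. The helper names and the adjacent-character swap are hypothetical illustrations; the code only prepares the training file and makes no API calls.

```python
import json
import random
from typing import List, Tuple

def perturb_text(text: str, n_swaps: int, rng: random.Random) -> str:
    """Hypothetical typo-style attack: swap up to n_swaps pairs of adjacent
    letters inside words. Length and character counts are preserved."""
    chars = list(text)
    candidates = [i for i in range(len(chars) - 1)
                  if chars[i].isalpha() and chars[i + 1].isalpha()]
    for i in rng.sample(candidates, min(n_swaps, len(candidates))):
        chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)

def to_chat_record(system: str, user: str, assistant: str) -> dict:
    """One training example in chat-message form."""
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
        {"role": "assistant", "content": assistant},
    ]}

def build_augmented_jsonl(examples: List[Tuple[str, str]], system: str,
                          n_variants: int = 2, n_swaps: int = 2, seed: int = 0) -> str:
    """Emit each clean example plus n_variants perturbed copies with the SAME
    label, so fine-tuning teaches the model to ignore the perturbation."""
    rng = random.Random(seed)
    lines = []
    for text, label in examples:
        lines.append(json.dumps(to_chat_record(system, text, label)))
        for _ in range(n_variants):
            noisy = perturb_text(text, n_swaps, rng)
            lines.append(json.dumps(to_chat_record(system, noisy, label)))
    return "\n".join(lines)

if __name__ == "__main__":
    examples = [("Transfer approved for account", "legitimate"),
                ("Urgent wire to unknown payee", "fraud")]
    print(build_augmented_jsonl(examples, "Classify the transaction."))
```

Character swaps are only one perturbation family; word substitutions or paraphrases generated by an attack model could replace `perturb_text` without changing the rest of the pipeline.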

Innovating Defense Against Adversarial Attacks

We systematically review and implement advanced defense algorithms against adversarial attacks and evaluate their effectiveness in applications such as image classification and natural language processing.

Contact Us for Research Inquiries

Reach out for collaboration on adversarial attack defenses.